Process plants and companies adopt IT trends much more cautiously than their commercial counterparts, since safety and availability must always take priority over cost and space savings. But when new IT trends such as virtualization and thin clients promise to increase safety and availability while lowering costs, process industry firms are compelled to take a closer look.

Virtualization and thin clients were initially adopted for data centers and other commercial IT applications to save money and space. Virtualization allows multiple operating systems and their associated applications to run simultaneously on one PC, so fewer PCs are required. That yields obvious savings in both up-front and operating costs (Figure 1), as well as in space.

For a data center with hundreds of server-level PCs, the cost and space savings from virtualization can be very compelling. But for process plants, sacrificing reliability for cost savings just isn’t worth it, particularly because a typical plant has only a few PCs running server-level applications such as HMIs, historians and I/O servers.

A typical end user expresses his doubts about the benefits of virtualization. “Our process control world is many orders of magnitude smaller, more stable and more contained than the IT world,” says John Rezabek, process control specialist at Ashland, a specialty chemical company. “I would most likely seek a gradual cutover for our HMIs, so we can compare reliability and performance side-by-side with one-off HMI workstations. The physical and functional redundancy of multiple independent HMIs is arguably worth what we pay for the relatively stable and reliable hardware, assuming licensing costs are roughly equal.”

So the burden of proof is on the technology providers: they must show often-skeptical process industry firms that virtualization can do more than save a few bucks by eliminating a couple of PCs.

Is Virtualization More Reliable?

It’s certainly counterintuitive that running several operating systems and applications on a single PC, instead of following the old one-operating-system-per-PC model, could increase reliability. But that is, in fact, the case when virtualization is applied correctly.

“For disaster recovery, since we are not using auto-provisioning (which would provide additional benefits in this area), the improvements are simply this: If we have a proper backup and a server crash, using virtual servers, we can use any brand/version of server upon which to restore the image,” explains John Dage, PE, the technical specialist for process controls at DTE Energy, an electric utility.

Without virtualization, a server crash could be much more problematic, because the server would have to be replaced with a new PC running the same operating system as the old one. Depending on the vintage of that operating system, this could be a major issue. Even if the operating system version weren’t a problem, its configuration could be, as server-level applications generally require a custom-configured operating system.

“Traditional non-virtual ghost images required lots of hands-on work to deal with incompatibility of firmware and peripherals. Virtualization takes that out of the picture,” notes Dage.

Simply put, an operating system and its associated application can be easily mirrored on a second PC in a virtualized environment, and this mirrored instance can be brought online immediately. Even without real-time mirroring, recovery from a PC failure is much quicker with virtualization.

Mallinckrodt LLC is the pharmaceuticals business unit of Covidien, a global healthcare products company. Mallinckrodt is the world’s largest supplier of both controlled substance pain medication and acetaminophen. “When a PC fails, the average time to rebuild a PC has been eight to 10 hours, but the worst case with virtualization is 30 minutes,” notes Tom Oberbeck, a senior electrical engineer at Mallinckrodt.

So reliability is increased not by reducing potential PC failures, but by making recovery from a failure much quicker and simpler. Users can afford to purchase more expensive and more reliable server-class PCs with features such as redundant power supplies and hard disks because there are fewer PCs to purchase, further increasing reliability.
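The point that reliability improves through faster recovery, not fewer failures, can be made concrete with the standard steady-state availability formula, A = MTBF / (MTBF + MTTR). The minimal sketch below holds the failure rate constant and varies only the repair time. The MTBF of 20,000 hours is a hypothetical placeholder, not a figure from any of the plants quoted here; the repair times come from Mallinckrodt’s 8-10 hour rebuild (midpoint used) and its 30-minute worst case with virtualization.

```python
# Steady-state availability: A = MTBF / (MTBF + MTTR).
# ASSUMED_MTBF is a hypothetical placeholder, not a number reported in the article.

HOURS_PER_YEAR = 8760.0

def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Fraction of time a system is up, given mean time between failures and mean time to repair."""
    return mtbf_hours / (mtbf_hours + mttr_hours)

def downtime_hours_per_year(mtbf_hours: float, mttr_hours: float) -> float:
    """Expected hours of downtime per year at steady state."""
    return (1.0 - availability(mtbf_hours, mttr_hours)) * HOURS_PER_YEAR

ASSUMED_MTBF = 20_000.0  # hypothetical; the same hardware failure rate is used for both cases
REBUILD_MTTR = 9.0       # midpoint of the 8-10 hour PC rebuild Mallinckrodt reports
VM_MTTR = 0.5            # Mallinckrodt's 30-minute worst case with virtualization

if __name__ == "__main__":
    for label, mttr in (("physical rebuild", REBUILD_MTTR), ("virtualized restore", VM_MTTR)):
        a = availability(ASSUMED_MTBF, mttr)
        hours = downtime_hours_per_year(ASSUMED_MTBF, mttr)
        print(f"{label}: A = {a:.6f}, about {hours:.2f} h/yr of downtime")
```

Because the failure rate is identical in both cases, the roughly 18-fold drop in expected downtime comes entirely from the shorter repair time, which is the mechanism the Mallinckrodt figures describe.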

“With virtualization, redundant servers connected to redundant storage area networks that host virtual machines can provide hot-swap failover capability. If the primary host machine fails, the virtual machines are moved to the secondary host machine on the fly, without interruption,” observes Chuck Toth, an MSEE and a consultant at systems integrator Maverick Technologies.
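The arrangement Toth describes rests on one idea: because the virtual machines’ disk images live on shared storage rather than on a particular host, any healthy host can take them over. The toy model below illustrates only that placement logic. The `Host` and `FailoverPair` classes and the VM names are hypothetical and do not correspond to any hypervisor’s API.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    """A physical server running a hypervisor (toy model, not a real hypervisor API)."""
    name: str
    healthy: bool = True
    vms: list[str] = field(default_factory=list)

@dataclass
class FailoverPair:
    """Two hosts attached to the same shared storage, so either host can run any VM."""
    primary: Host
    secondary: Host

    def place(self, vm: str) -> None:
        # New VMs start on the primary; their disk images are assumed to live on shared storage.
        self.primary.vms.append(vm)

    def fail_primary(self) -> list[str]:
        """Mark the primary as failed, move its VMs to the secondary, and return the moved names."""
        self.primary.healthy = False
        if not self.secondary.healthy:
            raise RuntimeError("no healthy host left to take over")
        moved = list(self.primary.vms)
        self.secondary.vms.extend(moved)
        self.primary.vms.clear()
        return moved

# Hypothetical server-level workloads for a small plant.
pair = FailoverPair(Host("host-a"), Host("host-b"))
for vm in ("hmi-server", "historian", "io-server"):
    pair.place(vm)
moved = pair.fail_primary()
print(f"moved to {pair.secondary.name}: {moved}")
```

The model ignores timing. Whether a real failover is seamless or a quick restart of the VMs on the surviving host depends on which high-availability features the hypervisor provides.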

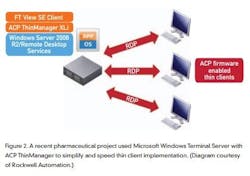

Increased reliability is one of the leading benefits of virtualization (others are listed in Table 1). Chief among the others is the longer lifecycle it gives applications, a major boon for process industry firms.

Life Extension

A major complaint about using PCs in process control applications has been the relatively short lifecycles of operating systems compared with those of software applications. For example, an HMI application configured to run on a PC could have a lifecycle of decades, whereas the underlying operating system’s lifecycle typically tops out at about five years. So a PC failure 10 years into the life of an HMI application would require a new PC with a new operating system, and often major changes to the HMI application. In a virtualized environment, however, the virtual machine’s lifecycle no longer depends on the hardware and the operating system. Instead, it depends on the lifecycle of the hypervisor, which is typically much longer than that of an operating system.

The hypervisor is the software that runs between the PC and the operating systems on a virtualized PC, and it abstracts the PC hardware from the operating system and the application. This is what allows operating systems and associated applications to be moved so easily among PCs in a virtualized environment, whatever the vintage of the PCs.

“With the relatively quick lifecycles of PCs and operating systems compared to the long lifecycles of industrial automation installations, the hardware independence offered by virtualization is very attractive,” says Paul Darnbrough, PE, the engineering manager at KDC Systems (www.kdc-systems.com).

In the pharmaceutical industry, replacing a PC requires revalidation, which drives costs up sharply. Genentech (www.gene.com), a biotech company based in South San Francisco, Calif., specializes in using human genetic information to develop and manufacture medicines to treat patients with serious or life-threatening medical conditions.

“Genentech estimated that the costs to upgrade one of its Windows 95 PC-based HMIs to a Windows Server 2003-based system would be approximately $40,000,” recounts Anthony Baker, PlantPAx characterization and lab manager at Rockwell Automation (www.rockwellautomation.com). “Final figures topped $100,000 because of costs associated with validating the system for use in a regulated industry,” adds Baker.

“Assuming that the operating systems are updated about every five years, costs quickly become a limiting factor in keeping an installed base of PCs up-to-date. Additional factors like computer hardware changes also contribute to the cost of upgrades, as each change incurs engineering expenses and possibly production downtime,” notes Baker.

Instead of investing in costly hardware and software upgrades, Genentech implemented virtualization.

According to Dallas West, the cell culture automation group leader at Genentech, one of the most lasting effects of virtualization is that it allows legacy operating systems, such as Windows 95 and Windows NT, to run successfully on computers manufactured today. This extends HMI product lifecycles from 5-7 years to 10-15 years, and possibly longer.
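To see why lifecycle length matters so much, count the forced platform upgrades over an application’s service life. The sketch below compares Baker’s roughly five-year operating system cycle with the 10-15 year lifecycles West cites. The 30-year horizon is a hypothetical stand-in for “decades,” and reusing Genentech’s $100,000 final figure as a flat per-upgrade cost is an assumption for illustration only; it ignores inflation and the fact that that figure came from a single Windows 95 to Windows Server 2003 upgrade.

```python
import math

def forced_upgrades(horizon_years: float, platform_life_years: float) -> int:
    """Number of platform replacements needed after the initial install to cover the horizon."""
    if platform_life_years <= 0:
        raise ValueError("platform life must be positive")
    return max(0, math.ceil(horizon_years / platform_life_years) - 1)

HORIZON_YEARS = 30          # hypothetical service life for an HMI application
COST_PER_UPGRADE = 100_000  # Genentech's final figure for one validated upgrade, reused as an assumption

scenarios = {
    "OS-bound PC (5-year cycle)": 5,
    "virtualized, low end (10 years)": 10,
    "virtualized, high end (15 years)": 15,
}

for label, life in scenarios.items():
    n = forced_upgrades(HORIZON_YEARS, life)
    print(f"{label}: {n} upgrades, about ${n * COST_PER_UPGRADE:,}")
```

Under these assumptions, a 30-year application tied to a five-year operating system cycle goes through five forced upgrades, versus one or two when the hypervisor decouples it from the hardware.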

“Having the ability to extend the useful life of a computer system allows a manufacturer to create a planned, predictable upgrade cycle commensurate with its business objectives,” West says.

“No longer is a business forced to upgrade its systems because a software vendor has come out with a new version. Upgrading systems can once again be driven by adding top-line business value, and by choosing to upgrade only when new features become available that will provide an acceptable return on investment,” he adds.

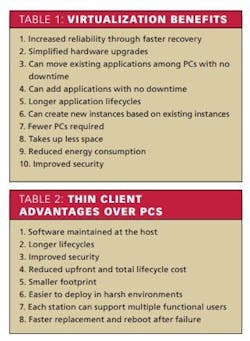

Extending the lifecycle of an application cuts costs, and increasing reliability saves money by avoiding downtime. But the most direct cost savings of virtualization are found by simply reducing the number of PCs (Figure 2), while maintaining all of the functionality of a traditional one operating system/PC configuration.

And It’s Cheaper

A pharmaceutical application puts some hard numbers on savings realized by virtualization. “Gallus BioPharmaceuticals and Malisko Engineering recently deployed a new Rockwell Automation PlantPAx process automation system that replaced six non-virtualized servers with two virtual servers, saving 60% of the server costs, or $20,000,” says Steve Schneebeli, the lead systems engineer at Malisko Engineering.

Similarly, Mallinckrodt saved big on a recent central utilities upgrade project by using virtualization to replace 22 PCs with just 13 physical PCs plus nine virtual machines. “Upgrading the current existing applications on the 22 PCs was significantly more than the cost of the entire new VM infrastructure. Virtualization produced an initial cost avoidance of $120,000, and a future projected cost avoidance of an additional $100,000,” says Mallinckrodt’s Oberbeck. “We anticipate reduced downtime when dealing with production issues, and when making process improvements. Maintenance costs will be cut, and there will be substantial energy savings.”
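The figures quoted in the two projects above can be unpacked with a little arithmetic. A $20,000 saving that represents 60% of server costs implies an original server budget of roughly $33,000; because the 60% is probably rounded, treat that as an estimate. The sketch below does that calculation and the Mallinckrodt machine count. Only the input numbers come from the article; `implied_baseline` is an illustrative helper.

```python
def implied_baseline(savings: float, fraction_saved: float) -> float:
    """Back out the original cost from an absolute saving and the fraction of cost it represents."""
    if not 0 < fraction_saved <= 1:
        raise ValueError("fraction_saved must be in (0, 1]")
    return savings / fraction_saved

# Gallus/Malisko: six non-virtualized servers replaced by two, saving 60%, or $20,000.
gallus_before = implied_baseline(20_000, 0.60)
gallus_after = gallus_before - 20_000
server_reduction = (6 - 2) / 6

# Mallinckrodt: 22 PCs replaced by 13 physical PCs plus nine virtual machines.
pcs_before, pcs_after = 22, 13
pc_reduction = (pcs_before - pcs_after) / pcs_before
total_avoidance = 120_000 + 100_000  # initial plus projected cost avoidance

print(f"Gallus: about ${gallus_before:,.0f} before, ${gallus_after:,.0f} after; "
      f"{server_reduction:.0%} fewer servers")
print(f"Mallinckrodt: {pc_reduction:.0%} fewer physical PCs; ${total_avoidance:,} total cost avoidance")
```

Note that at Gallus the server count fell by about two-thirds while server cost fell by 60%, plausibly because each virtualization host is a more capable and more expensive machine than the servers it replaces.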

Fewer PCs also mean fewer points of entry for hackers and other intruders, which reduces compliance costs and makes the system simpler to secure. Almost all virtualized systems are housed in secure, protected and climate-controlled locations, but many users need access to the applications running on them from the plant floor or the field. That need is a great fit for another data center technology: thin clients.

Thin Clients

When users want to access virtualized systems, the two main choices are PCs and thin clients. For offices and other protected environments, PCs are usually the best choice because in most cases the PCs will be used for other tasks in addition to process control and monitoring.

But on the plant floor and in the field, thin clients have a number of advantages over thick client PCs (Table 2). “The beauty of thin clients is that, if the operator console fails, it can be swapped out in minutes. Once reconnected to the virtual machine, the operator picks up where he or she left off, saving hours of precious time,” explains Maverick’s Toth.

A recent pharmaceutical project cut costs by 33% and footprint by 50% by using thin clients instead of thick clients. “With thin clients, users can create ‘follow-me’ operator workstations that can follow a single operator from thin client to thin client in a large plant, instead of creating a thick client at each physical location,” explains Schneebeli of Malisko.
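Both the quick terminal swap Toth describes and the “follow-me” workstation Schneebeli describes follow from the same design: the operator’s session runs on a virtual machine in the server room, and a thin client only displays it. The toy broker below sketches that idea under those assumptions. `SessionBroker`, its methods and the terminal names are hypothetical, not any vendor’s product.

```python
from dataclasses import dataclass, field

@dataclass
class SessionBroker:
    """Toy 'follow-me' broker: each operator owns one persistent desktop session on a VM."""
    sessions: dict[str, str] = field(default_factory=dict)  # operator -> session id
    attached: dict[str, str] = field(default_factory=dict)  # operator -> thin client showing it

    def connect(self, operator: str, client: str) -> str:
        # Reuse the operator's running session if there is one; otherwise start a new one.
        session = self.sessions.setdefault(operator, f"session-{operator}")
        # Connecting from another terminal only moves the display; the session keeps running.
        self.attached[operator] = client
        return session

    def client_failed(self, client: str) -> list[str]:
        """Detach every operator shown on a failed terminal; their sessions stay alive."""
        affected = [op for op, c in self.attached.items() if c == client]
        for op in affected:
            del self.attached[op]
        return affected

broker = SessionBroker()
first = broker.connect("operator-1", "tc-control-room")
second = broker.connect("operator-1", "tc-pump-house")       # operator walks to another terminal
lost = broker.client_failed("tc-pump-house")                 # that terminal fails
third = broker.connect("operator-1", "tc-pump-house-spare")  # swapped unit reconnects
print(first == second == third, broker.attached["operator-1"])
```

Because no state lives on the terminal, replacing a failed unit means reconnecting to a session that never stopped, which is why the swap takes minutes rather than hours.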

A recently completed water/wastewater utility plant design provided eight operator stations around the plant using thin clients, rather than the full PC workstations used in the past. The initial benefit is greatly reduced workstation and HMI licensing costs.

Thin clients can be either fixed operator interface terminals, or portable wireless devices. “Virtualization provides a path to enabling workers to access their workstations on a variety of hardware platforms,” observes Rockwell Automation’s Baker.

“From tablets to thin clients, users can access their workstations from anywhere they have Internet access inside or outside of the plant. The desktops no longer run on the local hardware, but instead are securely running back in the data center. Users will be able to view and interact with their desktops through new virtual desktop infrastructure (VDI) products,” adds Baker.

Another supplier offers a host of thin client solutions based on open standards. “We provide several workstations and panel products that include the Windows Remote Management designation for thin client virtualization applications,” says Louis Szabo, business development manager at Pepperl+Fuchs.

Pepperl+Fuchs customers are taking advantage of thin-client visualization of virtualized systems in a number of ways. “In a silicon processing application, thin clients are used in facilities monitoring water treatment and HVAC systems. A keyboard, video, mouse (KVM) extender wasn’t feasible due to distances involved, and a full PC was too expensive due to hardware and software costs. Thin clients were already employed on our customer’s business systems, so with the DCS vendor’s recommendation, the customer decided to utilize thin clients for remote monitoring,” explains Szabo.

Virtualization and thin clients are being used in many process automation applications, either together or separately. The future promises to bring these technologies to the forefront, but virtualization in particular will require a change in mindset among suppliers, system integrators and end users.

The Future

Thin clients are already in widespread use in process plants, but virtualization is catching on more slowly, in some cases because end users and system integrators are waiting on automation suppliers. “The major HMI software vendors have begun to offer some products that are ‘virtualization-ready’ for a production environment within the last few years. Therefore, even though virtualization is a proven technology in the IT world, it is still fairly young in the industrial automation world,” says Darnbrough of KDC Systems.

“Many of our larger and municipal customers will be slow to adopt virtualized systems in their specifications, but we anticipate starting up a cogeneration, balance-of-plant system at a major customer site this year with an HMI that takes advantage of virtualization,” notes Darnbrough.

HMI software vendors and other suppliers of server-level software applications have only recently begun to offer virtualization-ready products, but their offerings are growing rapidly, and are being packaged for quick installation and use.

“Most recently, Rockwell Automation introduced virtual image templates for our PlantPAx process automation system, including a system server, operator workstation and engineering workstation. These templates are preinstalled with PlantPAx software, and are delivered as images on a USB hard drive, helping to reduce validation costs and system deployment time,” explains Baker.

“Setting up and installing a complete process automation system traditionally takes several days, with various operating systems, software packages, and patches. However, with a virtual image template of each server and station, system components can be deployed in minutes. The templates are distributed in Open Virtualization Format (OVF). OVF is an open standard for distributing virtual machines, and is compatible with a number of virtualization solution providers,” concludes Baker.
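An OVF package typically consists of an XML descriptor (the .ovf file), disk image files and an optional manifest (.mf) listing a cryptographic digest for each file. Checking those digests before deployment confirms that a delivered template is byte-for-byte what the vendor shipped, which is relevant in validated environments. The sketch below assumes the manifest line format as we understand it from the DMTF OVF specification, for example `SHA256(name.ovf)= <hex digest>`; check your own tooling’s output before relying on it. Whether any particular vendor’s templates include a manifest is not stated here, and the file names in `demo()` are hypothetical.

```python
import hashlib
import re
import tempfile
from pathlib import Path

# Assumed manifest line format, e.g.  SHA256(system-server.ovf)= 9f86d081...
MANIFEST_LINE = re.compile(r"^(SHA1|SHA256)\((.+)\)= ?([0-9a-fA-F]+)$")

def parse_manifest(text: str) -> list[tuple[str, str, str]]:
    """Return (algorithm, file name, expected hex digest) for each non-blank manifest line."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        match = MANIFEST_LINE.match(line)
        if match is None:
            raise ValueError(f"unrecognized manifest line: {line!r}")
        algo, name, digest = match.groups()
        entries.append((algo.lower(), name, digest.lower()))
    return entries

def file_digest(path: Path, algo: str) -> str:
    """Hash a file in 1 MiB chunks so large disk images need not fit in memory."""
    h = hashlib.new(algo)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()

def verify_package(package_dir: Path, manifest_name: str) -> dict[str, bool]:
    """Map each file listed in the manifest to whether its digest matches."""
    text = (package_dir / manifest_name).read_text()
    return {
        name: file_digest(package_dir / name, algo) == expected
        for algo, name, expected in parse_manifest(text)
    }

def demo() -> tuple[bool, bool]:
    """Build a tiny hypothetical package, verify it, alter the descriptor, verify again."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp)
        descriptor = pkg / "template.ovf"
        descriptor.write_bytes(b"<Envelope/>")  # placeholder, not a real OVF descriptor
        digest = hashlib.sha256(b"<Envelope/>").hexdigest()
        (pkg / "template.mf").write_text(f"SHA256(template.ovf)= {digest}\n")
        intact = verify_package(pkg, "template.mf")["template.ovf"]
        descriptor.write_bytes(b"<Envelope>edited</Envelope>")
        tampered = verify_package(pkg, "template.mf")["template.ovf"]
    return intact, tampered

if __name__ == "__main__":
    print(demo())
```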

ABB has embraced virtualization technology for its entire server/client architecture. “Our System 800xA is factory tested and fully documented using virtualization technology,” says Roy Tanner, 800xA global product marketing channel manager for the Americas at ABB.

“Testing is done to ensure performance levels meet our customer’s most demanding applications. Our entire automation platform can run in a virtualized environment, including redundant servers that are connected to controller networks, application servers (historians, asset optimization, batch management, etc.) and even operator clients,” adds Tanner.

This is what’s happening now, but what might the future hold as data center technologies move into process automation?

“I anticipate three main advancements in the next 10 years,” predicts Paul Hodge, product manager for virtualization at Honeywell Process Solutions. “It will be simpler to support more advanced virtualization features, such as high availability and transparent patching and upgrades. We’re doing this already with our turnkey blade server offering, and easy access to advanced features will continue to evolve.

“Virtualization has abstracted users from having to deal with physical hardware, but they now need to interface with the hypervisor. Private cloud technology will gradually be adopted in the process automation industry, and will abstract users from being exposed to the hypervisor. This transformation will allow users to be more application-centric,” explains Hodge.

“Finally, there will be increased use of virtual appliances, which are virtual machines containing the selected application with a bare bones and properly configured operating system. The idea is that the application and operating system are one. If the appliance needs to be upgraded, both the application and operating system will be upgraded at the same time. Users will only need to turn the virtual appliance on in the same way they would a DVD player or TV,” foresees Hodge.

Virtualization and thin client visualization are growing rapidly in the process industries due to lower costs, greater reliability and longer lifecycles. Further advancements promise to increase adoption rates, propelling process automation systems to a brave new world that takes advantage of IT advances, while improving safety, availability and security.

This article was originally published by ControlGlobal.com. Dan Hebert PE is Control’s senior technical editor.