Slow rush to the cloud

From supply chains to the mobile workforce to technology transfer, the pharma industry is figuring out where it makes the most business and operational sense to leverage cloud technologies. Many production systems today can be implemented in the cloud, but there are still very good reasons for keeping some of them on-premises for the foreseeable future.

As organizations decide which systems to move to the cloud and which to keep on-prem, they must work out how to protect both data and process control while still making it easy to get critical data out of each system to support enterprise-wide business decisions. Network latency, security and the role each system plays in business processes should guide any strategy.

A new approach

For years, the Purdue Model — an industrial control systems architecture reference model — has been the gold standard for separating enterprise and control system functions into hierarchical zones for improved security and performance. More recently, however, the rapid expansion of IoT devices, along with edge and cloud technologies, has disrupted this hierarchical structure of data flow.

Today, manufacturers rely on data sharing between different levels of the organization and need an architecture that supports this data flow. The best solutions are tiered, combining local, cloud and hybrid architectures according to the needs of plant systems and functions.

A three-tiered system, consisting of an on-prem control layer, a hybrid real-time production layer, and a cloud-based enterprise layer, helps promote successful and secure operations while ensuring data is not trapped in silos that impede business decisions and technology transfer.
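To make the three tiers concrete, here is a minimal Python sketch that sorts systems into layers using three properties this article keeps returning to: latency tolerance, tolerance of an internet outage, and impact on safety or quality. The `SystemProfile` fields, the `assign_tier` rule and the 100 ms cutoff are hypothetical illustrations, not a published classification method.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    CONTROL = "on-prem control layer"
    PRODUCTION = "hybrid production layer"
    ENTERPRISE = "cloud enterprise layer"


@dataclass
class SystemProfile:
    name: str
    max_latency_ms: float           # slowest response the system can tolerate
    survives_internet_outage: bool  # can it keep working if the WAN link drops?
    affects_safety_or_quality: bool


# Hypothetical cutoff for illustration only; real limits come from each
# system's risk assessment, not from this sketch.
CONTROL_LATENCY_MS = 100.0


def assign_tier(system: SystemProfile) -> Tier:
    """Map a system onto the three-tier model (hypothetical rule)."""
    if system.max_latency_ms <= CONTROL_LATENCY_MS and system.affects_safety_or_quality:
        return Tier.CONTROL      # time- and safety-critical: keep fully on-prem
    if not system.survives_internet_outage:
        return Tier.PRODUCTION   # host locally, sync to the cloud in the background
    return Tier.ENTERPRISE       # tolerant of latency and outages: cloud-hosted


examples = [
    SystemProfile("closed-loop process control", 50, False, True),
    SystemProfile("MES order execution", 2_000, False, True),
    SystemProfile("multi-site analytics", 60_000, True, False),
]
for s in examples:
    print(f"{s.name} -> {assign_tier(s).value}")
```

The rule checks the strictest tier first, so a system lands in the cloud only after it has been shown to tolerate both latency and outages.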

The on-prem control layer

Control components and systems must be fully protected because any incident at the control level has the highest impact on safety and production. All components operating at the control layer benefit from low-latency, high-speed and fully redundant networking. Because these are the most mission-critical and time-sensitive systems, usually residing on the plant floor, cloud hosting is generally not a reliable or secure enough option. The control layer is therefore typically hosted on-prem to give software and equipment the fastest, most reliable and most secure connections.

Closed-loop process control

Process robotics, machine and equipment control typically reside in the control layer. As the heart of operations for production, the control system always needs a high-speed, low-latency connection to plant equipment and personnel.

Moreover, in continuous control and batch executive operations, many plants use coordination and advanced control strategies, which directly impact lab and production equipment. Continuous operation strategies such as cross-unit coordination drive production processes to a desired state and ensure successful lot production. This cross-unit coordination provides feed-forward and feedback events and triggers, where timing is critical. Keeping the control system in the same physical location as plant equipment ensures network latency or internet outages do not create problems in coordinated responses.
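The feed-forward and feedback interplay described above can be illustrated with a textbook PI controller plus a feed-forward term. This is a minimal sketch assuming a single downstream unit that receives one upstream measurement; the class name, the gains and the `max_ff_age_s` staleness rule are hypothetical. It shows why timing matters: when the upstream value arrives late, the controller discards the feed-forward term and falls back to slower, feedback-only correction.

```python
class FeedForwardPI:
    """Textbook PI feedback plus a feed-forward term from an upstream unit.

    All gains and the staleness limit are hypothetical tuning values.
    """

    def __init__(self, kff: float, kp: float, ki: float, dt_s: float, max_ff_age_s: float):
        self.kff, self.kp, self.ki, self.dt_s = kff, kp, ki, dt_s
        self.max_ff_age_s = max_ff_age_s  # oldest upstream value still trusted
        self.integral = 0.0

    def update(self, setpoint: float, measurement: float,
               upstream_value: float, upstream_age_s: float) -> float:
        # Feedback: correct the error this unit actually measures.
        error = setpoint - measurement
        self.integral += error * self.dt_s
        feedback = self.kp * error + self.ki * self.integral

        # Feed-forward: act on the upstream change before it shows up here,
        # but only if the value is fresh; a delayed signal is ignored.
        if upstream_age_s <= self.max_ff_age_s:
            feedforward = self.kff * upstream_value
        else:
            feedforward = 0.0
        return feedforward + feedback
```

Keeping the control system local is what keeps `upstream_age_s` small enough for the feed-forward path to stay useful.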

Safety and equipment health

High-speed, low-latency operation is also essential for safety functions and for machinery health monitoring. Fast local networking at the control layer lets processes and equipment communicate the state changes that trigger interlocks, essential functionality that cannot risk delays from a network outage.

In addition, executing health management functions for critical or hazardous equipment at the control layer enables a fast response, such as tripping a faulted machine before the fault can cause damage or create safety risks.
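A latching trip of this kind can be sketched in a few lines. The sketch assumes two hypothetical health signals (vibration and bearing temperature) with illustrative limits; it evaluates them locally on every scan with no network call in the path, latches the trip, and only permits a reset once readings are back in range. A real implementation would live in the control system or a safety instrumented system, not in Python.

```python
from dataclasses import dataclass


@dataclass
class TripLimits:
    max_vibration_mm_s: float
    max_bearing_temp_c: float


class HealthInterlock:
    """Latching trip evaluated locally on each scan (hypothetical signals and limits)."""

    def __init__(self, limits: TripLimits):
        self.limits = limits
        self.tripped = False
        self.reasons: list[str] = []

    def _violations(self, vibration_mm_s: float, bearing_temp_c: float) -> list[str]:
        found = []
        if vibration_mm_s > self.limits.max_vibration_mm_s:
            found.append("vibration")
        if bearing_temp_c > self.limits.max_bearing_temp_c:
            found.append("bearing temperature")
        return found

    def check(self, vibration_mm_s: float, bearing_temp_c: float) -> bool:
        """Return True if the machine must be (or stay) tripped."""
        found = self._violations(vibration_mm_s, bearing_temp_c)
        if found and not self.tripped:
            self.tripped = True   # latch: stays tripped until an explicit reset
            self.reasons = found
        return self.tripped

    def reset(self, vibration_mm_s: float, bearing_temp_c: float) -> bool:
        """Clear the trip only if readings are back within limits; return True on success."""
        if not self._violations(vibration_mm_s, bearing_temp_c):
            self.tripped = False
            self.reasons = []
        return not self.tripped
```

Latching means a fault that clears on its own still leaves the machine stopped until someone deliberately resets it.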

Easily moving data

Nearly all process data flows through the control layer. Even though the control layer will not be hosted in the cloud, organizations still need a method to gather process data, and to pass it securely across the site and enterprise for better business decisions.

Typically, plants do not want to send control data thousands of miles away to a data center for processing, nor do they want to introduce the security risk of opening the control layer to external internet-connected systems. Edge computing solutions solve this problem by bringing computation and data storage closer to the devices generating data in the control layer.
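One common edge pattern is to reduce high-rate control data to compact summaries before anything leaves the site. The Python sketch below assumes readings arrive as `(timestamp_s, value)` pairs and produces one min/max/mean row per fixed time window; the function name and output fields are hypothetical.

```python
from statistics import mean


def summarize_windows(samples, window_s):
    """Reduce raw (timestamp_s, value) samples to one summary row per time window.

    Only the summaries would leave the edge; the raw high-rate data stays local.
    """
    windows = {}  # window start time -> values that fall in that window
    for ts, value in samples:
        start = int(ts // window_s) * window_s  # align to the window boundary
        windows.setdefault(start, []).append(value)
    return [
        {"window_start": start, "count": len(vals), "min": min(vals),
         "max": max(vals), "mean": mean(vals)}
        for start, vals in sorted(windows.items())
    ]


rows = summarize_windows([(0, 1.0), (1, 3.0), (10, 5.0), (12, 7.0)], window_s=10)
for row in rows:
    print(row)
```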

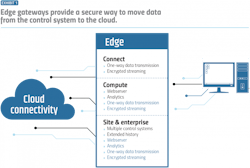

The edge gateway, one key component for implementing edge communication, securely collects data from the control system without impacting its performance or uptime (Exhibit 1).

Dedicated security features like one-way data diodes with encrypted messaging can deliver essential data from the plant to enterprise systems without exposing control technologies to the internet. In addition, edge gateways remove the complex configuration, and the loss of context, that come with routing data through a third-party system such as a historian.
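The sketch below illustrates the software side of one-way delivery under stated assumptions. A hardware data diode is what physically enforces one-way flow; here a fire-and-forget UDP send stands in for the transmit side, and nothing is ever read back. Each frame carries a sequence number (so the receiver can detect drops without acknowledgments) and an HMAC-SHA256 tag from Python's standard `hmac` module. HMAC provides integrity and authenticity, not confidentiality: the encryption mentioned above would need a cipher such as AES-GCM from a third-party library, which is not shown. The function names and frame layout are hypothetical.

```python
import hashlib
import hmac
import json
import socket


def build_frame(seq: int, tag: str, value: float, ts: float, key: bytes) -> bytes:
    """Serialize one reading and append an HMAC-SHA256 tag for integrity."""
    payload = json.dumps({"seq": seq, "tag": tag, "value": value, "ts": ts},
                         sort_keys=True).encode()
    mac = hmac.new(key, payload, hashlib.sha256).hexdigest().encode()
    return payload + b"\n" + mac


def verify_frame(frame: bytes, key: bytes):
    """Receiver side: return the decoded reading, or None if the frame was altered."""
    payload, _, mac = frame.rpartition(b"\n")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest().encode()
    if not hmac.compare_digest(mac, expected):  # constant-time comparison
        return None
    return json.loads(payload)


def send_one_way(frame: bytes, host: str, port: int) -> None:
    """Fire-and-forget UDP send: no response is ever read from the far side."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(frame, (host, port))
```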

Secure edge gateway configurations function as a one-way IT/OT bridge to pull production data to higher levels of the enterprise. These solutions can also be scaled to support edge analytics, or to contextualize and deliver data from multiple control systems to a hybrid cloud or cloud for enterprise-wide analysis.

The hybrid production layer

Many pharma production systems rely on real-time data to allow systems and personnel to quickly respond to incidents. These systems perform real-time or near-real-time manufacturing automation and optimization directly impacting production. As a result, they require the security and low latency most easily provided by on-prem technology.

However, real-time production systems also regularly need fast and intuitive connectivity to cloud enterprise systems. Those enterprise systems provide access to corporate-wide material, laboratory and planning information, along with the site-based dashboarding and inventory management needed to make strategic decisions. Access to them must be tightly secured but does not require the low latency of production systems (Exhibit 2).

With a hybrid production layer, these systems can be hosted locally on virtual machines to ensure real-time data connectivity, while still providing background cloud connectivity, with front-end interfaces replicating cloud systems.
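Background cloud connectivity of this kind is often implemented as store-and-forward: local systems write to a local buffer first, and a background task pushes buffered records upward whenever the link is available. This is a minimal in-memory sketch. The `upload` callable and its convention of raising `ConnectionError` when offline are assumptions, and a system handling GMP data would persist the buffer to local disk rather than hold it in memory.

```python
from collections import deque
from itertools import islice


class StoreAndForward:
    """Buffer records locally and push them to the cloud whenever the link is up."""

    def __init__(self, upload):
        # upload: hypothetical callable taking a list of records; assumed to
        # raise ConnectionError when the cloud endpoint is unreachable.
        self.upload = upload
        self.buffer = deque()

    def record(self, rec) -> None:
        """Local systems write here first, so they never wait on the WAN."""
        self.buffer.append(rec)

    def flush(self, batch_size: int = 500) -> int:
        """Send buffered records oldest-first; return how many were delivered."""
        sent = 0
        while self.buffer:
            batch = list(islice(self.buffer, batch_size))
            try:
                self.upload(batch)
            except ConnectionError:
                break                   # keep everything and retry on the next flush
            for _ in batch:
                self.buffer.popleft()   # drop only after a successful upload
            sent += len(batch)
        return sent
```

Records leave the buffer only after the upload succeeds, so an outage delays cloud delivery without losing data or stalling local operations.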

Manufacturing execution

Many of the real-time systems essential to improved manufacturing execution benefit from stable, low-latency connectivity to plant hardware and systems. In systems like order execution, real-time material management and real-time equipment state management, a delay of even a few seconds can create complications in production, and impact quality or safety.

Today, most production facilities run real-time systems at the production layer to provide low-latency performance, while still making it easy to transfer critical data to enterprise systems in the cloud for more centralized management and performance evaluation.

Continuous monitoring and predictive maintenance

Closed-loop process analytical technology (PAT) and real-time exception (RTE) management also run best on local hardware with access to cloud systems. Like manufacturing execution systems, real-time quality control applications are constantly connected to plant hardware, and they often require real-time alert delivery and response. Plant personnel rely on PAT and RTE systems to deliver critical messages to mobile devices as soon as they occur, enabling staff to respond and intervene before an anomaly impacts production.
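A core piece of exception management is deciding when a reading warrants an alert. This minimal sketch, with hypothetical tag names and limits, raises an alert only when a reading first leaves its limits and re-arms once it returns, so staff are not flooded with a repeat message on every scan. Delivering the alert text to mobile devices is left to whatever messaging service a site uses.

```python
from dataclasses import dataclass


@dataclass
class Limit:
    low: float
    high: float


class ExceptionMonitor:
    """Alert when a reading leaves its limits, not on every scan it stays out."""

    def __init__(self, limits: dict[str, Limit]):
        self.limits = limits
        self.active: set[str] = set()  # tags currently in exception

    def scan(self, readings: dict[str, float]) -> list[str]:
        new_alerts = []
        for tag, value in readings.items():
            lim = self.limits.get(tag)
            if lim is None:
                continue                 # tag not monitored
            out_of_range = value < lim.low or value > lim.high
            if out_of_range and tag not in self.active:
                self.active.add(tag)
                new_alerts.append(f"{tag}={value} outside [{lim.low}, {lim.high}]")
            elif not out_of_range:
                self.active.discard(tag)  # back in range: re-arm the alert
        return new_alerts


monitor = ExceptionMonitor({"pH": Limit(6.8, 7.4), "DO_pct": Limit(30.0, 60.0)})
print(monitor.scan({"pH": 7.9, "DO_pct": 40.0}))
```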

In addition, enterprise personnel need the same data, though not in real time, to track and trend the performance of individual production centers across multiple locations. As a result, maintenance and production optimization systems typically perform better in a hybrid environment, hosted on local hardware for fast notifications to on-site personnel but able to connect and deliver data seamlessly to cloud systems. Cloud connectivity drives enterprise analytics and predictive modeling, information that is no less critical but far less time-sensitive.

Scheduling

Modern digital scheduling tools offer powerful, real-time scheduling to monitor current production and automatically adjust future production. For high-volume, low-margin plants, this scheduling often necessitates instantaneous status updates, where delays due to network outages or latency cannot be tolerated.

Scheduling software hosted virtually with on-prem equipment offers the best of both worlds, providing the performance necessary to continually update scheduling accurately, while also providing fast cloud connectivity to schedule data for monitoring, trending and adjustment at the enterprise level.
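At its simplest, real-time rescheduling means pushing downstream work back when a status update shows a unit will free up later than planned. This sketch assumes a single unit running jobs in a fixed sequence; the `Job` fields and the `reschedule` function are hypothetical, and commercial scheduling tools handle multiple units, constraints and optimization that are out of scope here.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    duration_h: float
    start_h: float  # planned start, in hours from a reference time


def reschedule(jobs: list[Job], available_at_h: float) -> list[Job]:
    """Re-time jobs on one unit in sequence.

    Each job starts at its planned time or when the unit frees up,
    whichever is later; jobs are updated in place.
    """
    t = available_at_h
    for job in jobs:
        job.start_h = max(job.start_h, t)
        t = job.start_h + job.duration_h
    return jobs


# Status update: the unit is busy until hour 12 instead of hour 10.
plan = [Job("A", 4, 10), Job("B", 2, 14), Job("C", 3, 20)]
for job in reschedule(plan, available_at_h=12):
    print(job.name, job.start_h)
```

The delay ripples forward only until a job's planned start already falls after the new finish time, which is why job C keeps its original slot.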

Data lakes

Pharma manufacturers rely on real-time manufacturing data repositories to facilitate easier technology transfer. Research, reliability, operations, maintenance and other departments may all need to collect and use OT data. Data lakes connect the wide variety of software packages used by different departments. They are used to store, standardize and share data in real time across every stage of a product's life, from development to production.
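Standardizing data from different departments usually means mapping each source's field names and units onto one common schema while recording where each value came from. The sketch below assumes two hypothetical sources, a LIMS and a historian, with invented field names, and applies a single example unit rule (Fahrenheit to Celsius).

```python
# Hypothetical source schemas: each department's system names the same
# concepts differently. Real mappings would come from the data lake's data model.
FIELD_MAPS = {
    "lims": {"batch": "lot_id", "test": "parameter", "result": "value", "uom": "unit"},
    "historian": {"BatchID": "lot_id", "Tag": "parameter", "Val": "value", "EU": "unit"},
}


def normalize(record: dict, source: str) -> dict:
    """Rename fields to a common schema, harmonize units and keep lineage."""
    field_map = FIELD_MAPS[source]
    out = {common: record[src] for src, common in field_map.items()}
    if out["unit"] == "degF":                     # one example unit rule
        out["value"] = round((out["value"] - 32) * 5 / 9, 3)
        out["unit"] = "degC"
    out["source"] = source                        # record where the value came from
    return out


print(normalize({"BatchID": "L001", "Tag": "TT-101", "Val": 98.6, "EU": "degF"}, "historian"))
print(normalize({"batch": "L001", "test": "assay", "result": 99.2, "uom": "%"}, "lims"))
```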

Many organizations opt to host data lakes in the cloud. However, other organizations are hesitant to store data in a cloud repository for security and time-sensitivity reasons. These organizations often look to on-prem data lake solutions to improve connectivity and contextualization of data, without relinquishing control of sensitive information.

Such an approach allows organizations far greater control over the data available inside and outside of the corporate network. This ensures continuous access to real-time data without limiting the effectiveness of key enterprise technologies such as advanced analytics, process knowledge management (PKM), and enterprise resource planning (ERP) systems.

The cloud enterprise layer

Enterprise-level functions take a long-term view of operations across time and multiple plants. As a result, the data they use does not typically need to be on-site, nor does it rely heavily on real-time delivery.

Full cloud-based solutions are particularly valuable at the enterprise level, where systems perform less time-sensitive tasks. While cloud systems are highly stable and often boast uptime higher than 99.9%, scheduled or unscheduled service outages will likely still occur. These outages are more easily tolerated by business-level systems, which are important but won’t cause production, safety or quality issues in the event of downtime.
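The arithmetic behind the 99.9% figure shows why outages still matter: over an 8,760-hour year, 99.9% availability still permits roughly 8.8 hours of downtime. The short function below computes this for any availability percentage, ignoring leap years.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability_pct: float) -> float:
    """Downtime per year still permitted by an availability percentage."""
    return HOURS_PER_YEAR * (1 - availability_pct / 100)


for pct in (99.0, 99.9, 99.99):
    print(f"{pct}% -> {annual_downtime_hours(pct):.2f} h/year")
```

Several hours a year is tolerable for reporting or analytics but would be unacceptable for the control and production layers described earlier.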

Analytics

Advanced analytics systems use multi-source data coupled with artificial intelligence (AI) and machine learning (ML) to create the powerful predictive technologies enabling speed-to-market.

For analytics systems performing non-real-time multi-site analysis, hardware requirements tend to increase quickly. As a result, investing in on-prem equipment to run models and engines is often cost prohibitive. Cloud systems, however, scale quickly, easily and affordably to keep pace with the needs of complex AI and ML models. Moreover, analytics software in the cloud is regularly updated, ensuring users always have access to the latest and greatest models to drive better production and higher quality.

Cloud hosted applications make it easier to integrate across systems such as performance reporting, facility and site dashboards, ERP and laboratory information management systems. In a growing number of facilities, cloud connectivity continues down to systems in the hybrid space, like electronic lab notebooks and manufacturing execution systems.

Process knowledge management (PKM)

PKM applications improve technology transfer and collaboration across the enterprise. These systems thrive in a cloud environment, where anyone in the organization — regardless of location — can quickly access data they need from any stage of therapy development. Process development, material science, technology and other personnel can collaborate to scale recipes and more easily execute technology transfer from anywhere in the world.

Facility modeling and debottlenecking

Manufacturers use modeling and digital twin simulation for a variety of production needs, from testing process changes and eliminating production bottlenecks, to performing predictive maintenance tasks, to training operators to improve performance, quality and safety.

High-fidelity simulations, which start large and continue to grow as new process areas and models are added, are ideal for cloud hosting, where storage and processing power are easily and affordably scalable at a moment’s notice (Exhibit 3).
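Before running a full simulation, a first-order debottlenecking check is simple: for stages run in series, line throughput is capped by the stage with the lowest capacity. The sketch below uses hypothetical stage names and capacities; a digital twin adds what this static estimate ignores, such as variability, changeovers and shared resources.

```python
def find_bottleneck(capacity_per_day: dict[str, float]) -> tuple[str, float]:
    """For stages run in series, throughput is capped by the slowest stage."""
    stage = min(capacity_per_day, key=capacity_per_day.get)
    return stage, capacity_per_day[stage]


# Hypothetical capacities, in batches per day.
line = {"fermentation": 2.0, "harvest": 6.0, "purification": 1.5, "fill_finish": 4.0}
print(find_bottleneck(line))   # ('purification', 1.5)

line["purification"] = 3.0     # what-if: add purification capacity
print(find_bottleneck(line))   # ('fermentation', 2.0)
```

Raising purification capacity simply moves the bottleneck to fermentation, the kind of what-if question a facility model answers at much larger scale.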

Picking the right architecture

While not all systems need to be hosted on-site, neither is every system ready to run in the cloud. Fortunately, organizations don’t need to choose one solution or the other. Instead of rushing to the cloud, organizations should focus on security, performance and safety needs to select an array of architectures supporting equipment and personnel, while maintaining a secure, productive environment.

With the wide range of cloud, hybrid and edge solutions available, it is easy to find the right mix of hosting technologies to improve performance, throughput, and speed to market — while still protecting key elements of development and production such as quality, runtime and security.